In today's era of expansive data volumes, AI stands at the forefront of revolutionizing how organizations manage ...

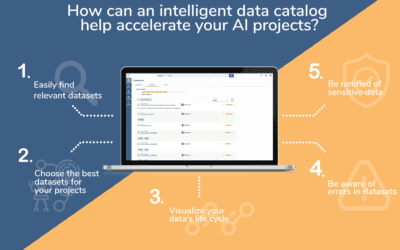

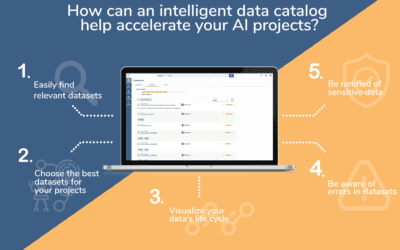

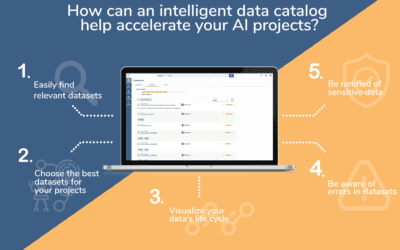

In today's data-driven landscape, organizations increasingly rely on AI to gain insights, drive innovation, and ...

Just as shopping for goods online involves selecting items, adding them to a cart, and choosing delivery and ...

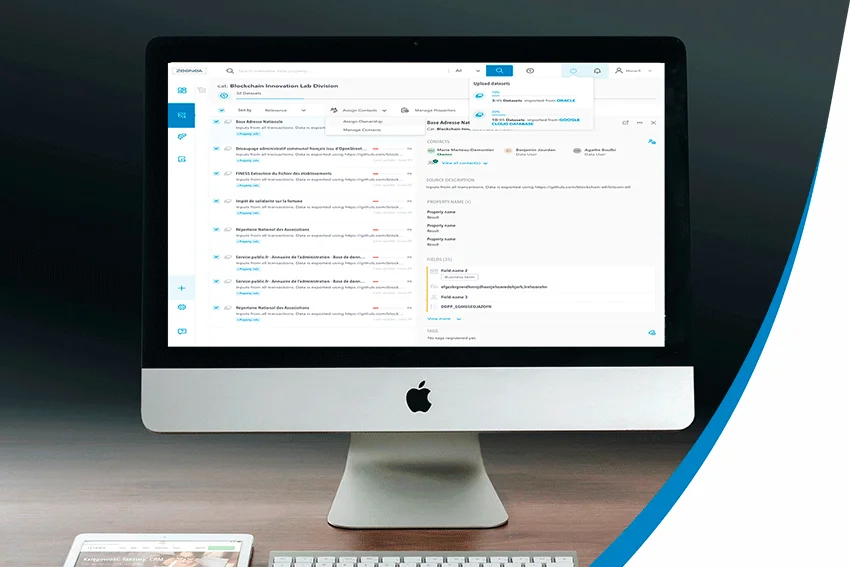

Over the past decade, data catalogs have emerged as important pillars in the landscape of data-driven initiatives. ...

In the ever-evolving data and digital landscape, data sharing has become essential to drive business value. ...

Data Mesh is one of the hottest topics in the data space. In fact, according to a recent BARC Survey, 54% of ...

Over the past decade, Data Catalogs have emerged as important pillars in the landscape of data-driven initiatives. ...

Paris, January 23, 2024 - Zeenea, a leader in metadata management and data discovery solutions, today ignites a ...

2023 was another big year for Zeenea. With more than 50 releases and updates to our platform, these past 12 months ...

Each year, Zeenea organizes exclusive events that bring together our clients and partners from various ...

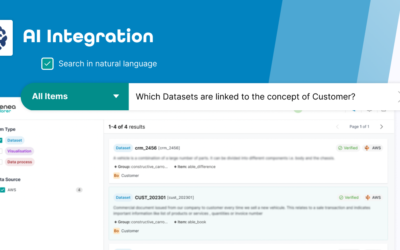

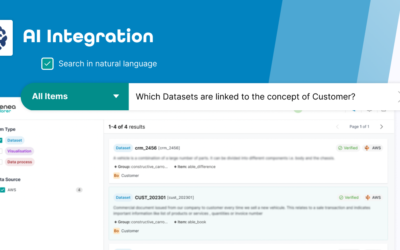

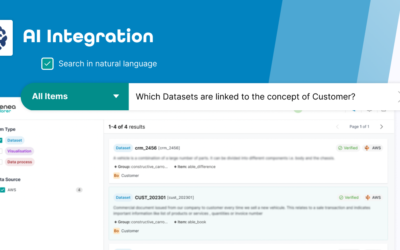

Zeenea is happy to announce the integration of Natural Language Processing (NLP) search capabilities in our Data ...

You've put data at the center of your company's business strategy, but the amount of data you have to handle is ...

Do you have the ambition to turn your organization into a data-driven enterprise? You cannot escape the need to ...

In today's data-driven world, organizations from all industries are collecting vast amounts of data from various ...

You wake up with your heart pounding. Your feet are trembling - just moments ago you were being chased by ...

Zeenea is a proud sponsor of BARC’s Data Culture Survey 23. Get your free copy here. In last year’s BARC Data ...

You have data - and lots of it. However, it is messy, incomplete, and scattered into several different platforms, ...

Introduction: what is data mesh?

As companies are becoming more aware of the importance of their data, they are ...

Metadata management is an important component in a data management project and it requires more than just the data ...

By implementing a data stewardship program in your organization, you ensure not only the quality of your data but ...

Since the beginning of the 21st century, we’ve been experiencing a true digital revolution. The world is ...

To remain competitive, organizations must make decisions quickly, as the slightest mistake can lead to a waste of ...

Faced with the increase in cyber threats, organizations endure a slew of customer requests for security assurance. ...

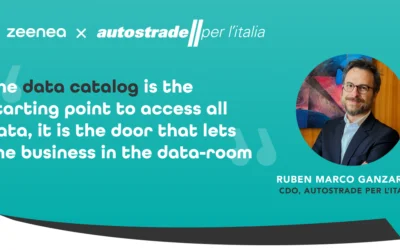

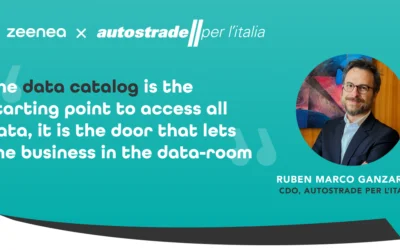

We are pleased to have been selected by Autostrade per l'Italia - a European leader among concessionaires for the ...

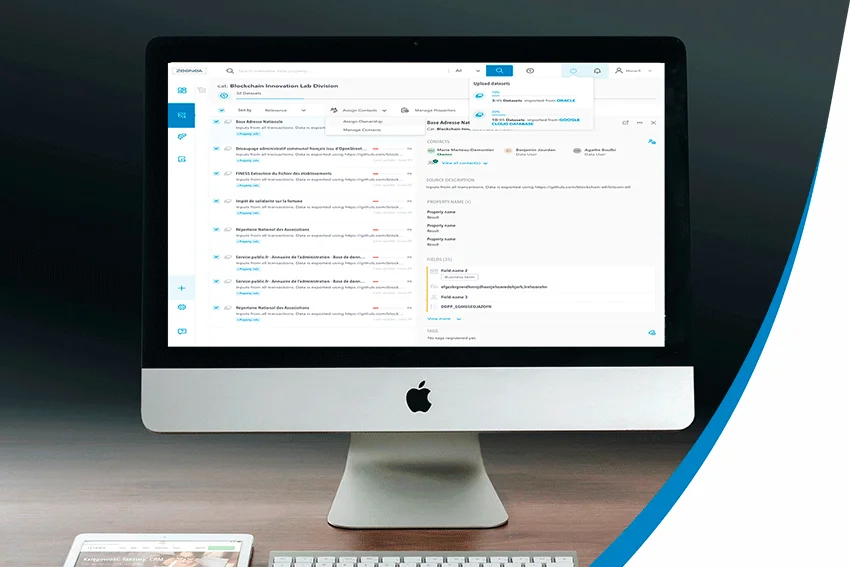

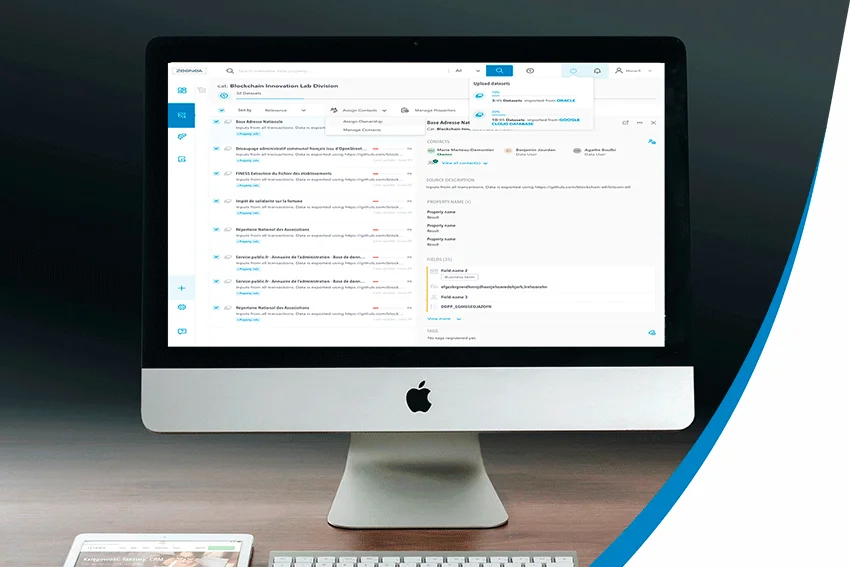

A data catalog harnesses enormous amounts of very diverse information - and its volume will grow ...

An organization needs to handle vast volumes of technical assets that often carry a lot of duplicate information ...

On October 20, BARC hosted an interactive webinar with three historical data catalog vendors - Alation, ...

An organization’s data catalog enhances all available data assets by relying on two types of information - on the ...

In our previous article, we broke down Data Lineage by presenting the different lineage typologies (physical ...

As a concept, Data Lineage seems universal: whatever the sector of activity, any stakeholder in a data-driven ...

After starting out as an on-premise data catalog solution, Zeenea made the decision to switch to a fully SaaS ...

In order to access and exploit your data assets on a regular basis, your organization will need to know ...

Does your company produce or use more and more data? To better classify, manage, and give meaning to your data, ...

Having large volumes of data isn’t enough: it’s what you make of it that counts! To make the most out of your ...

The Data Catalog market has developed rapidly, and it is now deemed essential when deploying a data-driven ...

For the last couple of years, the Zeenea international sales team has been in contact with prospective clients the ...

In this new era of information, new terms are used in organizations working with data: Data Management Platform, ...

Regardless of the business sector, data management is a key strategic asset for companies. This information is key ...

Digital transformation has become a priority in organizations' business strategies and manufacturing industries ...

How can you benefit from a Machine Learning Data Catalog?

You can use Machine Learning Data Catalogs (MLDCs) to ...

Knowledge graphs have been interacting with us for quite some time. Whether it be through personalized shopping ...

The term "smart data catalog" has become a buzzword over the past few months. However, when referring to something ...

Data lakes offer unlimited storage for data and present lots of potential benefits for data scientists in the ...

In today’s world, Big Data environments are more and more complex and difficult to manage. We believe that Big ...

There are many solutions on the data catalog market that offer an overview of all enterprise data all thanks to ...

Data has become one of the main drivers of innovation in many sectors. And as data continues to rapidly ...

The explosion of data sources in organizations, the heterogeneity of data or even the new demands related to data ...

In metadata management, we often talk about data dictionaries and business glossaries. Although they might ...

The use of massive data by the internet giants in the 2000s was a wake-up call for enterprises: Big Data is a ...

According to a Gartner study presented at the Data & Analytics conference in London 2019, 90% of large ...

Can machines think? We are talking about artificial intelligence, “the biggest myth of our time”! A simple ...

A data catalog is a portal that brings together metadata on the data sets collected by the enterprise. This ...

Dealing with large volumes of data is essential to any organization's success. But knowing what kind of data it ...

Data stewards are the first point of reference for data and serve as an entry point for data access. They have ...

It is no secret that the enormous volumes of information that companies generate require the right tools ...

The arrival of Big Data did not simplify how enterprises work with data. The volume, the variety, and the ...

Data lineage is defined as the life cycle of data: its origin, movements, and impacts over time. It offers ...

Data lineage is defined as a type of data life cycle. It is a detailed representation of any data over ...

![[SERIES] Data Shopping Part 2 – The Zeenea Data Shopping Experience](https://zeenea.com/wp-content/uploads/2024/06/zeenea-data-shopping-experience-blog-image-400x250.png)

![[SERIES] Building a Marketplace for Data Mesh Part 3: Feeding the Marketplace via domain-specific data catalogs](https://zeenea.com/wp-content/uploads/2024/06/iStock-1418478531-400x250.jpg)

![[SERIES] Building a Marketplace for Data Mesh Part 2: Setting up an enterprise-level marketplace](https://zeenea.com/wp-content/uploads/2024/06/iStock-1513818710-400x250.jpg)

![[SERIES] Building a Marketplace for data mesh Part 1: Facilitating data product consumption through metadata](https://zeenea.com/wp-content/uploads/2024/05/iStock-1485944683-400x250.jpg)

![[Press Release] Zeenea launches its Enterprise Data Marketplace, revolutionizing Data Product Management](https://zeenea.com/wp-content/uploads/2024/01/launch-enterprise-data-marketplace-400x250.png)
