Data Quality refers to an organization’s ability to maintain the quality of its data over time. If we were to take some data professionals at their word, improving Data Quality is the panacea for all our business woes and should therefore be the top priority.

At Zeenea, we believe this view should be nuanced: Data Quality is one means among others of reducing the uncertainty around meeting corporate objectives.

In this series of articles, we will go over everything data professionals need to know about Data Quality Management (DQM):

- The nine dimensions of Data Quality

- The challenges and risks associated with Data Quality

- The main features of Data Quality Management tools

- The Data Catalog contribution to DQM

Some definitions of Data Quality

Asking Data Analysts or Data Engineers for a definition of Data Quality will get you very different answers, even within the same company and among similar profiles. Some, for example, will focus on the uniqueness of data, while others will prefer to reference standardization. You may well have your own interpretation.

The ISO 9000:2015 standard defines quality as “the capacity of an ensemble of intrinsic characteristics to satisfy requirements”.

DAMA International (The Global Data Management Community) – a leading international association involving both business and technical data management professionals – adapts this definition to a data context: “Data Quality is the degree to which the data dimensions meet requirements.”

The dimensional approach to Data Quality

From an operational perspective, Data Quality translates into what we call Data Quality dimensions, in which each dimension relates to a specific aspect of quality.

The four dimensions most often used are completeness, accuracy, validity, and availability. The literature describes many more dimensions and criteria for Data Quality. There is, however, no consensus on what these dimensions actually are.

For example, DAMA enumerates sixty dimensions, whereas most Data Quality Management (DQM) software vendors offer five or six.

The nine dimensions of Data Quality

At Zeenea, we believe that the ideal compromise is to take into account nine Data Quality dimensions: completeness, accuracy, validity, consistency, timeliness, uniqueness, clarity, traceability, and availability.

We will illustrate these nine dimensions and the different concepts we refer to in this publication with a straightforward example:

Arthur is in charge of sending marketing campaigns to clients and prospects to present his company’s latest offers. He encounters, however, certain difficulties:

- Arthur sometimes sends communications to the same people several times,

- The emails provided in his CRM are often invalid,

- Prospects and clients do not always receive the right content,

- Some information pertaining to the prospects is obsolete,

- Some clients receive emails with erroneous gender qualifications,

- There are two addresses for clients/prospects but it’s difficult to understand what they relate to,

- He doesn’t know the origin of some of the data he is using or how he can access their source.

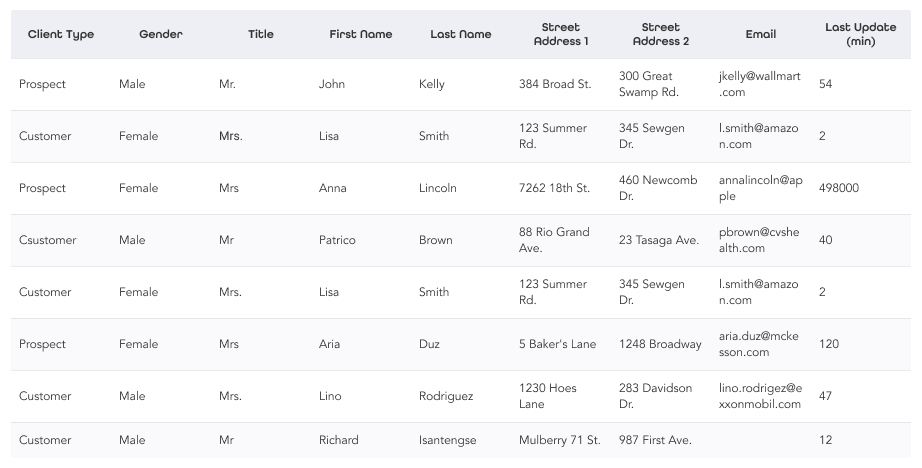

Below is the data Arthur has at hand for his sales efforts. We will use it to illustrate each of the nine dimensions of Data Quality:

1. Completeness

Is the data complete? Is any information missing? The objective of this dimension is to identify empty, null, or missing data. In this example, Arthur notices that some email addresses are missing:

To remedy this, he could try and identify whether other systems have the information needed. Arthur could also ask data specialists to manually insert the missing email addresses.
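A completeness check of this kind can be sketched in a few lines of Python. This is only an illustration under assumptions: the records, the email values, and the helper names `is_missing` and `completeness` are hypothetical and not taken from Arthur's actual data.

```python
# Hypothetical records; only the idea of an "email" column comes from the example.
records = [
    {"name": "Lisa Smith", "email": "lisa.smith@example.com"},
    {"name": "Anna Lincoln", "email": ""},
    {"name": "Lino Rodrigez", "email": None},
]


def is_missing(value):
    """Treat None and blank strings as missing values."""
    return value is None or str(value).strip() == ""


def completeness(rows, column):
    """Return the share of rows that have a non-missing value in `column`."""
    if not rows:
        return 1.0
    filled = sum(1 for row in rows if not is_missing(row.get(column)))
    return filled / len(rows)


# Names of the contacts whose email address still has to be found elsewhere.
missing_emails = [row["name"] for row in records if is_missing(row.get("email"))]
email_completeness = completeness(records, "email")

print(missing_emails)
print(f"Email completeness: {email_completeness:.0%}")
```

The list of names with a missing email is what Arthur would hand over to the data specialists, or use to look the values up in other systems.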

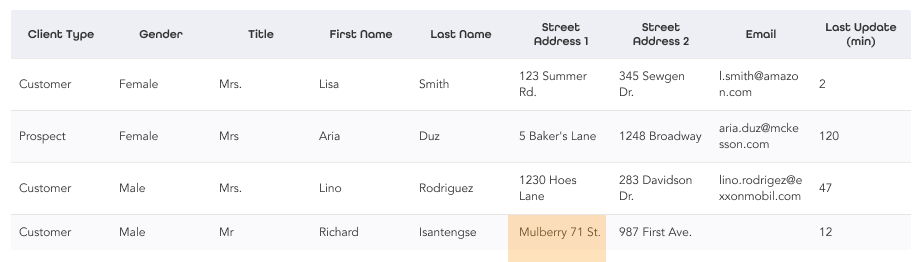

2. Accuracy

Are the existing values consistent with the actual data, i.e., the data we find in the real world?

Arthur noticed that some letters sent to important clients are returned because of incorrect postal addresses. Below, we can see that one of the addresses doesn’t match the standard address formats in the real world:

It could be helpful here for Arthur to use postal address verification services.

3. Validity

Does the data conform to the syntax of its definition? The purpose of this dimension is to ensure that the data conforms to a model or a particular rule.

Arthur noticed that he regularly gets bounced emails. Another problem is that certain prospects/clients do not receive the right content because they haven’t been accurately qualified. For example, the email address annalincoln@apple isn’t in the correct format and the Client Type Csutomer isn’t correct.

To solve this issue, he could, for example, make sure that the Client Type values belong to a list of reference values (Customer or Prospect) and that email addresses conform to a specific format.
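Both validity rules can be expressed as small checks. The sketch below is a minimal illustration: the reference list comes from the text, while the email pattern is a deliberately simple assumption (something@domain.tld) and not a full implementation of the email address standard. The function names are hypothetical.

```python
import re

# Reference values named in the text.
ALLOWED_CLIENT_TYPES = {"Customer", "Prospect"}

# Assumed, simplified format: local part, "@", domain containing at least one dot.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def is_valid_email(email):
    """Return True when the address matches the simplified pattern above."""
    return bool(EMAIL_PATTERN.fullmatch(email or ""))


def is_valid_client_type(client_type):
    """Return True when the value belongs to the reference list."""
    return client_type in ALLOWED_CLIENT_TYPES


print(is_valid_email("annalincoln@apple"))  # no top-level domain, so rejected
print(is_valid_client_type("Csutomer"))     # misspelled, so not in the reference list
```

In practice, such rules are most useful when applied at data entry, so that invalid values never reach the CRM in the first place.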

4. Consistency

Do the different values of the same record conform to a given rule? The aim is to ensure that the data is coherent across several columns.

Arthur noticed that some of his male clients complain about receiving emails in which they are referred to as Miss. There does appear to be an inconsistency between the Gender and Title columns for Lino Rodrigez.

To solve these types of problems, it is possible to create a logical rule that ensures that when the Gender is Male, the Title should be Mr.
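A cross-column rule of this kind could look like the following sketch. Only the Male and Mr pairing comes from the text; the dictionary layout, the key names `gender` and `title`, and the choice to accept records that no rule covers are assumptions made for illustration.

```python
# Assumed rule table: for each Gender value, the Title values that are allowed.
# Only the "Male" -> "Mr" pairing is stated in the article.
EXPECTED_TITLES = {"Male": {"Mr"}}


def is_consistent(record):
    """Check that the Title agrees with the Gender when a rule exists for it."""
    allowed = EXPECTED_TITLES.get(record["gender"])
    if allowed is None:
        return True  # no rule defined for this Gender value
    return record["title"] in allowed


lino = {"name": "Lino Rodrigez", "gender": "Male", "title": "Miss"}
print(is_consistent(lino))  # the record from the example violates the rule
```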

5. Timeliness

Is the time lapse between the creation of the data and its availability appropriate? The aim is to ensure the data is accessible in as short a time as possible.

Arthur noticed that certain information on prospects is not always up to date because the data is too old. As a company rule, data on a prospect that is older than 6 months cannot be used.

He could solve this problem by creating a rule that identifies and excludes data that is too old. An alternative would be to retrieve this same information from another system that contains fresher data.
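The six-month rule can be sketched with the standard library alone. The helper names and the idea that each record carries a last-update date are assumptions; the text only states that prospect data older than 6 months cannot be used.

```python
import calendar
from datetime import date


def months_ago(today, months):
    """Return the date `months` calendar months before `today`.

    The day is clamped to the last day of the target month (e.g. Aug 31 -> Feb 29).
    """
    total = today.year * 12 + (today.month - 1) - months
    year, month_index = divmod(total, 12)
    month = month_index + 1
    day = min(today.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def is_fresh(last_updated, today, max_age_months=6):
    """Data updated on or after the cutoff date is still usable."""
    return last_updated >= months_ago(today, max_age_months)


today = date(2024, 8, 31)  # fixed date so the example is reproducible
print(months_ago(today, 6))
print(is_fresh(date(2024, 3, 1), today))
print(is_fresh(date(2024, 2, 28), today))
```

Records for which `is_fresh` returns False would be excluded from the campaign or refreshed from another system.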

6. Uniqueness

Are there duplicate records? The aim is to ensure the data is not duplicated.

Arthur noticed he was sending the same communications several times to the same people. Lisa Smith, for instance, is duplicated in the file:

In this simplified example, the duplicated data is identical. When duplicates differ slightly, string-similarity measures such as Jaro, Jaro-Winkler, or Levenshtein can group them more accurately.
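To give an idea of how approximate matching works, here is a minimal sketch based on the Levenshtein edit distance, written in plain Python. The threshold of one edit, the lowercase normalization, and the name "Lisa Smyth" are assumptions chosen for illustration; a real deduplication job would typically compare several fields and tune the threshold.

```python
def levenshtein(a, b):
    """Edit distance between two strings (insertions, deletions, substitutions)."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete char_a
                current[j - 1] + 1,      # insert char_b
                previous[j - 1] + cost,  # substitute (or keep when equal)
            ))
        previous = current
    return previous[-1]


def find_duplicates(names, max_distance=1):
    """Return pairs of names whose normalized forms are within `max_distance` edits."""
    normalized = [name.strip().lower() for name in names]
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if levenshtein(normalized[i], normalized[j]) <= max_distance:
                pairs.append((names[i], names[j]))
    return pairs


# "Lisa Smyth" is a hypothetical near-duplicate added for the illustration.
contacts = ["Lisa Smith", "Lisa Smith", "Lisa Smyth", "Anna Lincoln"]
for pair in find_duplicates(contacts):
    print(pair)
```

The pairwise comparison is quadratic in the number of records, which is acceptable for a small contact list but would need blocking or indexing on larger volumes.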

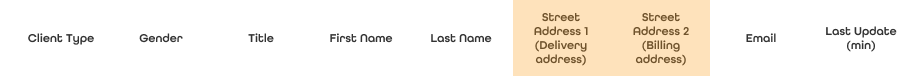

7. Clarity

Is the metadata easy for the data consumer to understand? The aim here is to make the meaning of the data clear and avoid misinterpretation.

Arthur has doubts about the two addresses given as it is not easy to understand what they represent. The names Street Address 1 and Street Address 2 are subject to interpretation and should be modified, if possible.

Renaming within a database is often a complicated operation, so the columns should, at the very least, be documented with a description.

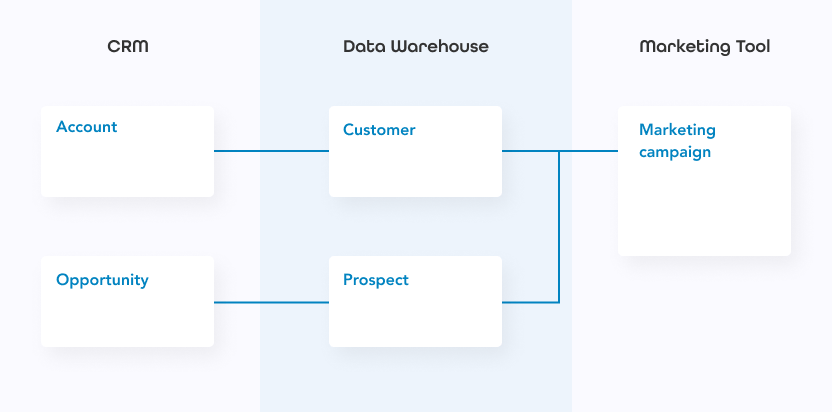

8. Traceability

Is it possible to trace the data? The aim is to get to the origin of the data, along with any transformations it may have gone through.

Arthur doesn’t really know where the data comes from or where he can access the data sources. Knowing this would have been quite useful, as it would have allowed the problem to be fixed at the source. He would have needed to know that the data he uses in his marketing tool comes from the company data warehouse, which is itself fed by the CRM tool.
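The lineage described here can be represented as a simple mapping from each system to the system that feeds it. The sketch below is only an illustration: the system names follow the text, while the dictionary structure and the function `trace_to_source` are hypothetical.

```python
# Assumed lineage: each system points to the system it gets its data from.
UPSTREAM = {
    "marketing tool": "data warehouse",
    "data warehouse": "CRM tool",
}


def trace_to_source(system, upstream=UPSTREAM):
    """Follow the lineage from `system` back to its original source."""
    path = [system]
    while path[-1] in upstream:
        parent = upstream[path[-1]]
        if parent in path:
            raise ValueError(f"Cycle detected in lineage at {parent!r}")
        path.append(parent)
    return path


print(" <- ".join(trace_to_source("marketing tool")))
```

The last element of the returned path is the system where a data problem should ideally be corrected.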

9. Availability

How can the data be consulted or retrieved by the user? The aim is to facilitate access to the data.

Arthur doesn’t know how to easily access the source data. Staying with the previous example, he wants easy access to the data in the data warehouse or the CRM tool.

In some cases, Arthur will need to make a formal request to access this information directly.
