As early as 2019, Gartner identified the Data Fabric as a major technology trend, and it later named it one of the top strategic technology trends for 2022. Behind this buzzword lies an important objective: to maximize the value of your data and accelerate your digital transformation. Discover how by following this guide!

Bringing order to your data is the promise of a Data Fabric. But a Data Fabric is more than a way to organize or structure information: it is designed to extract value from your data. This matters because the volume of data generated by companies is growing exponentially, and every second brings more data that can help organizations become more efficient and more attuned to their market and their customers. The figures speak for themselves: IDC estimates that by 2025, the volume of data generated globally will reach 175 zettabytes. That volume is so large that, stored on Blu-ray discs, it would form a stack 23 times the distance from the Earth to the Moon!

What is Data Fabric?

Gartner defines Data Fabric as “a design concept that serves as an integrated layer (fabric) of data and connecting processes.” In other words, a Data Fabric continuously analyzes combinations of existing, accessible, and inferred metadata assets to generate smarter insights and make data management tasks more efficient. It then uses this metadata analysis to design new processes and to provide standardized access to data for every type of user in the enterprise: application developers, analysts, data scientists, and so on.

A Data Fabric is therefore a series of processes that read, capture, integrate, and deliver data based on an understanding of who is using the data, a classification of usage types, and the monitoring of changes in data usage patterns.
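To make the last part of this definition more concrete, here is a minimal Python sketch of monitoring changes in data usage patterns. It assumes a hypothetical access log in which each event records a user, a dataset, and a usage type that has already been classified upstream. The `AccessEvent`, `usage_mix`, and `usage_shifts` names, the usage categories, and the 0.3 threshold are illustrative assumptions, not part of any product or of Gartner's definition.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessEvent:
    """One entry from a hypothetical data access log."""
    user: str
    dataset: str
    usage_type: str  # hypothetical categories, e.g. "reporting", "ml_training"


def usage_mix(events):
    """Return {dataset: {usage_type: share}} for a window of access events."""
    counts = defaultdict(Counter)
    for event in events:
        counts[event.dataset][event.usage_type] += 1
    mix = {}
    for dataset, counter in counts.items():
        total = sum(counter.values())
        mix[dataset] = {usage: n / total for usage, n in counter.items()}
    return mix


def usage_shifts(previous, current, threshold=0.3):
    """Flag datasets whose usage mix moved by more than `threshold`.

    The distance used is the total variation distance between the two
    distributions: half the sum of the absolute differences in shares.
    """
    before, after = usage_mix(previous), usage_mix(current)
    flagged = {}
    # Only datasets observed in both windows can be compared.
    for dataset in before.keys() & after.keys():
        kinds = before[dataset].keys() | after[dataset].keys()
        distance = 0.5 * sum(
            abs(before[dataset].get(k, 0.0) - after[dataset].get(k, 0.0))
            for k in kinds
        )
        if distance > threshold:
            flagged[dataset] = round(distance, 2)
    return flagged


last_month = [
    AccessEvent("ana", "sales", "reporting"),
    AccessEvent("ben", "sales", "reporting"),
    AccessEvent("ana", "customers", "reporting"),
]
this_month = [
    AccessEvent("ana", "sales", "reporting"),
    AccessEvent("chloe", "sales", "ml_training"),
    AccessEvent("dan", "sales", "ml_training"),
    AccessEvent("ana", "customers", "reporting"),
]
print(usage_shifts(last_month, this_month))
```

In this toy example, `sales` is flagged because its usage moved from pure reporting to mostly model training, while `customers` is not. A signal of this kind is what a Data Fabric could use to adapt how a dataset is integrated and delivered.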

The benefits of a Data Fabric for enterprises

Gartner predicts that by 2024, Data Fabric deployments will quadruple the efficiency of data utilization while halving the data management tasks performed by humans. More specifically, Gartner identifies three main opportunities that a Data Fabric brings:

- A 70% reduction in data discovery, analysis and integration tasks for data teams;

- An increase in the number of data users, as data is reused for a greater number of use cases;

- The ability to get more value from more data, by significantly accelerating the onboarding and exploitation of secondary and third-party data.

From a technological standpoint, a Data Fabric adapts to the tools already in place within an organization. It can evolve from existing data integration and data quality tools, as well as data management and governance platforms (such as a data catalog, which we will come back to). Its design model is therefore attractive: it builds on your existing technology while supporting a strategic shift in how you manage data overall.

Finally, a Data Fabric helps companies break down data silos. This reduces the cost and effort for data teams, who would otherwise have to keep merging, recasting, and redeploying old data management silos only to replace them with new ones.

The contribution of a data catalog to a Data Fabric

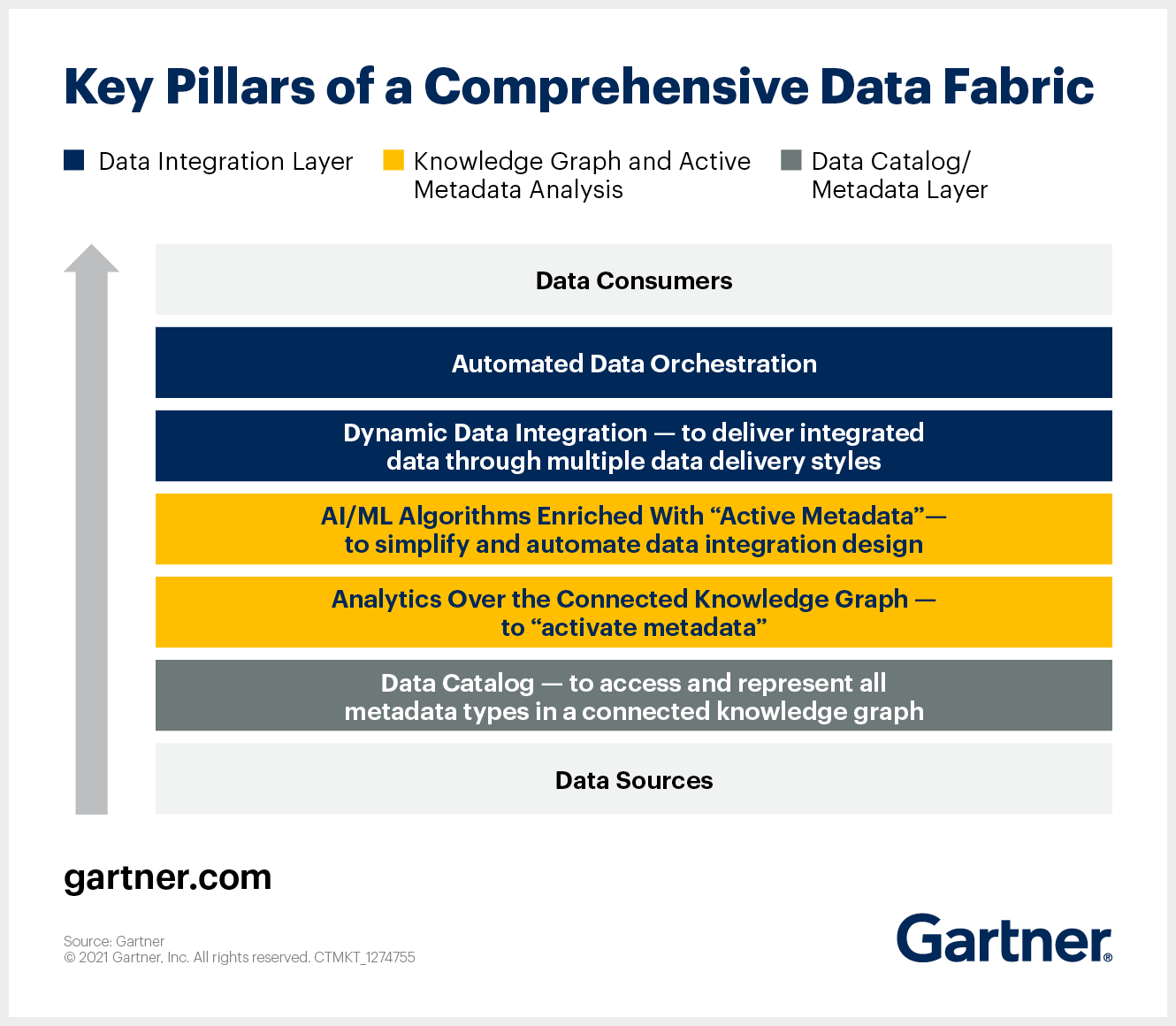

Taking as our guide the notion of an “integrated layer” from the definition of a Data Fabric, along with the diagram proposed by Gartner (above), we can see that a data catalog plays a fundamental role in building a Data Fabric: it underpins the higher layers that make a Data Fabric effective.

Layer #1 – Access to All Types of Metadata

A data catalog is the foundation of a Data Fabric: it forms the first (gray) layer and is the natural starting point for building one. It supports the identification, collection, and analysis of all data sources and all types of metadata.

Layer #2 – Metadata Activation and the Knowledge Graph

In the second layer of a Data Fabric (yellow), Gartner focuses on metadata activation. This activation involves the continuous analysis of metadata to calculate key indicators, an analysis facilitated by artificial intelligence (AI), machine learning (ML), and automated data integration.

The patterns and connections detected are then fed back into the data catalog and other data management tools to make recommendations to the people and machines involved in data management and integration. This requires continuous analysis over a connected knowledge graph, which makes it possible to create and visualize the relationships between data assets of different types, give them business meaning, and make this web of relationships easy for every user in the organization to discover and navigate.
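The knowledge graph idea can be illustrated with a small, hypothetical Python sketch: data assets of different types (tables, reports, glossary terms, source systems) are nodes, typed relationships are edges, and a breadth-first search lets a user navigate from one asset to everything related to it. The class, asset names, and relation labels are assumptions made for illustration; real catalogs rely on dedicated graph stores and much richer semantics.

```python
from collections import deque


class KnowledgeGraph:
    """Minimal in-memory graph of data assets and typed relationships (illustrative only)."""

    def __init__(self):
        self.asset_types = {}  # asset name -> asset type
        self.edges = {}        # asset name -> list of (relation, other asset)

    def add_asset(self, name, asset_type):
        self.asset_types[name] = asset_type
        self.edges.setdefault(name, [])

    def relate(self, source, relation, target):
        # Both assets must already be registered with add_asset.
        # The link is stored in both directions so the graph can be navigated either way.
        self.edges[source].append((relation, target))
        self.edges[target].append((f"inverse:{relation}", source))

    def neighborhood(self, start, max_hops=2):
        """Breadth-first search: assets reachable from `start` within `max_hops` links."""
        seen = {start: 0}
        queue = deque([start])
        while queue:
            current = queue.popleft()
            if seen[current] == max_hops:
                continue  # do not expand beyond the hop limit
            for _relation, other in self.edges[current]:
                if other not in seen:
                    seen[other] = seen[current] + 1
                    queue.append(other)
        del seen[start]
        return seen


graph = KnowledgeGraph()
for name, kind in [("orders", "table"), ("customers", "table"),
                   ("churn_dashboard", "report"), ("Customer", "glossary_term"),
                   ("crm_db", "source")]:
    graph.add_asset(name, kind)

graph.relate("orders", "references", "customers")
graph.relate("customers", "defined_by", "Customer")
graph.relate("churn_dashboard", "reads_from", "customers")
graph.relate("customers", "stored_in", "crm_db")

for asset, hops in sorted(graph.neighborhood("orders").items(), key=lambda kv: (kv[1], kv[0])):
    print(hops, asset, graph.asset_types[asset])
```

Starting from the `orders` table, the search surfaces the related `customers` table one hop away, then the business term, the dashboard, and the source system linked to it. Attaching the asset type to each result is what lets a business user make sense of what they are navigating.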

Layer #3 – Dynamic Data Integration

Gartner’s third layer (blue) primarily addresses the technical consumers of data in organizations. This layer of the Data Fabric refers to the need to prepare, integrate, explore, and transform data. The challenge here is to make data assets from a wide range of tools accessible to a wide range of business users. The watchwords are flexibility and compatibility, so that data silos can be broken down through the following features:

- A data permission management system: The Data Fabric must automate access management for each user.

- Automated provisioning: Anyone in the organization should be able to request access to a dataset directly from the Data Fabric, for example by creating a ticket, with data governance capabilities built into the process.

- A data exploration tool: The Data Fabric should allow users to explore data (not just metadata) without having to leave the fabric.
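The permission and provisioning features above can be sketched in Python, assuming hypothetical governance rules that map each dataset's sensitivity level to the roles allowed to access it. Every request produces a ticket; requests the rules allow are approved automatically, and the rest are left pending for a data owner. All names here (`POLICY`, `SENSITIVITY`, `ProvisioningService`) are illustrative, not the API of any real platform.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class AccessRequest:
    requester: str
    role: str
    dataset: str


@dataclass
class Ticket:
    ticket_id: int
    request: AccessRequest
    status: str  # "approved" or "pending_review"


# Hypothetical governance rules: which roles may access each sensitivity level.
POLICY = {
    "public": {"analyst", "data_scientist", "developer"},
    "internal": {"analyst", "data_scientist"},
    "restricted": set(),  # always requires a decision by the data owner
}

# Hypothetical dataset classification, as a data catalog might record it.
SENSITIVITY = {"web_traffic": "public", "sales": "internal", "payroll": "restricted"}


class ProvisioningService:
    def __init__(self, policy, sensitivity):
        self.policy = policy
        self.sensitivity = sensitivity
        self.grants = set()  # (requester, dataset) pairs that currently have access
        self.tickets = []    # every request is ticketed, which doubles as an audit trail
        self._ids = count(1)

    def request_access(self, request):
        # Datasets missing from the classification default to the strictest level.
        level = self.sensitivity.get(request.dataset, "restricted")
        if request.role in self.policy.get(level, set()):
            self.grants.add((request.requester, request.dataset))
            status = "approved"
        else:
            status = "pending_review"
        ticket = Ticket(next(self._ids), request, status)
        self.tickets.append(ticket)
        return ticket


service = ProvisioningService(POLICY, SENSITIVITY)
t1 = service.request_access(AccessRequest("ana", "analyst", "sales"))
t2 = service.request_access(AccessRequest("dev1", "developer", "sales"))
t3 = service.request_access(AccessRequest("ana", "analyst", "payroll"))
for ticket in service.tickets:
    print(ticket.ticket_id, ticket.request.requester, ticket.request.dataset, ticket.status)
```

Defaulting unclassified datasets to the strictest level is a deliberate choice in this sketch: it keeps automation from granting access that governance rules have not explicitly allowed.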

Automated data orchestration, shown in the top part of this third layer of the diagram, refers to DataOps: a collaborative data management practice aimed at improving the communication, integration, and automation of data flows between data managers and data consumers within an organization.

Is there a single tool for implementing a Data Fabric?

As Gartner points out, no single tool comprehensively supports every layer of the fabric. As a result, no single vendor can offer a platform that amounts to a complete Data Fabric. The answer lies in how the different layers interact: an open platform is key, and companies need to equip themselves with the best interconnected data tools to build a Data Fabric worthy of the name. Building a Data Fabric is a marathon, not a sprint, and should be approached in stages, starting with the data catalog.

Building a Data Fabric with Zeenea

At Zeenea, companies that have adopted our Smart Data Catalog have already laid the foundations of their Data Fabric. In addition to identifying, collecting, and analyzing all data sources and all types of metadata (first layer), Zeenea offers the features needed to activate metadata through its core: a knowledge graph (second layer). Finally, our catalog addresses the third layer, on the one hand by integrating data governance rules, and on the other through the Zeenea Explorer application, which acts as a true data marketplace where every business user can easily access the key datasets that interest them and quickly create value from the available data.
